This blog post covers additional methodology not included in Kevin Indig’s article “The first-ever UX Study of Google’s AI Overviews: The Data We’ve All Been Waiting For.” Read that article before digging into the granular details here. The piece on Growth Memo has the most pertinent methodology information.

Annotations and Coding

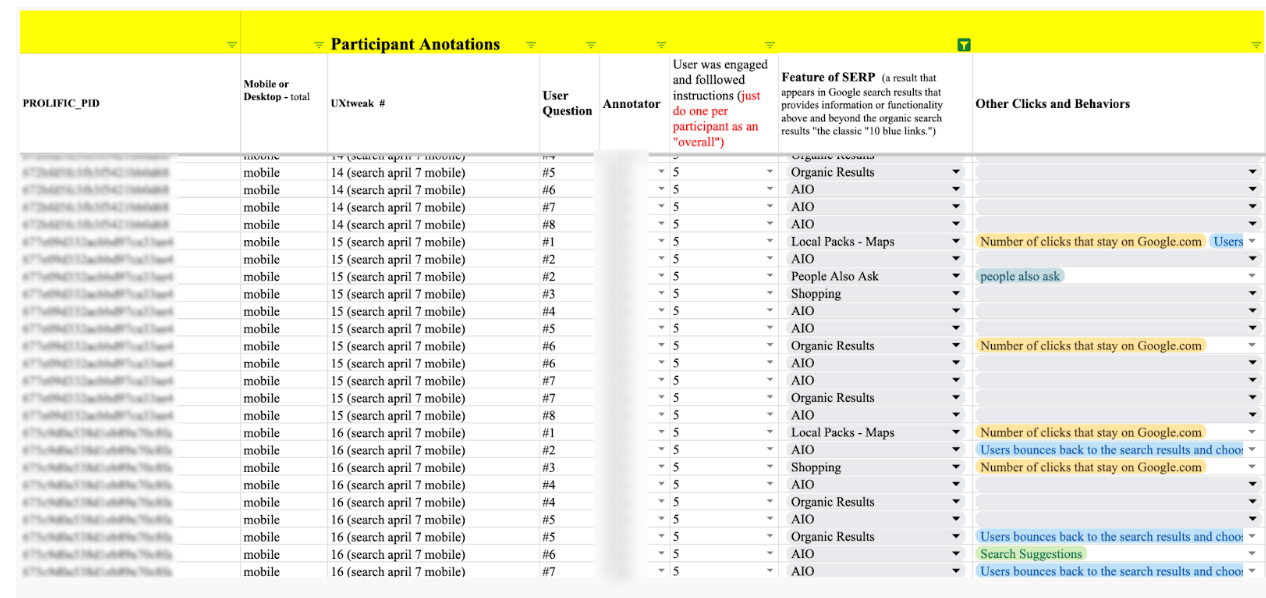

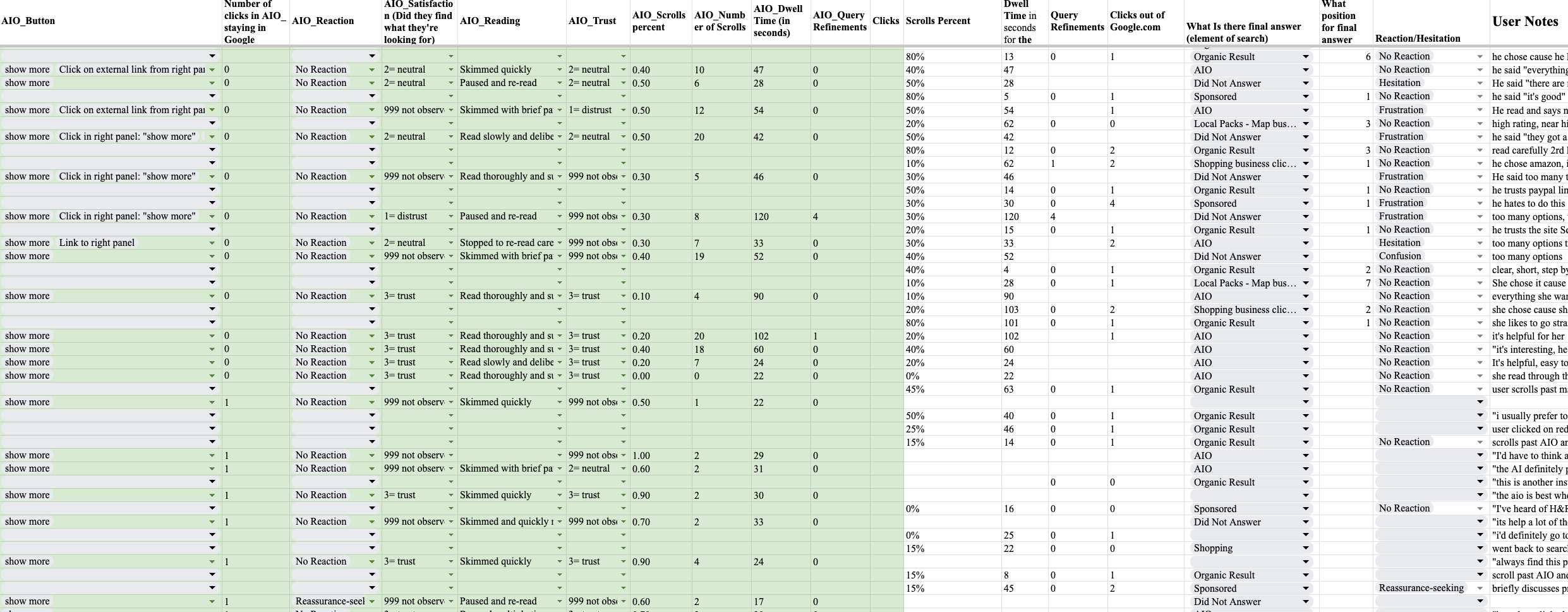

Our annotators systematically coded participant interactions, capturing detailed behaviors within Google’s AI Overview (AIO) feature and any other non-AIO SERP feature the user may have used. Annotators recorded SERP features only if participants’ cursors or gazes lingered for five seconds or more. The annotator recorded only the two longest dwells when a participant lingered on more than two search features for over five seconds.

We coded the following SERP features, which are the ones that appeared while participants completed their eight tasks:

| SERP Feature | Description |
| --- | --- |
| Sponsored | Paid advertisements that appear at the top or bottom of the search results page, often labeled as “Sponsored” or “Ad.” |
| Organic Result | Standard search result listing ranked by Google’s algorithm based on relevance, not paid placement. |
| AIO (AI Overview) | Google’s AI-generated summary or answer box that aggregates information from multiple sources and displays it at the top of SERP. |
| Shopping | Product listings, often in carousel format, showing images, prices, and merchant links for online shopping. |
| People Also Ask (PAA) | Expandable accordion boxes with questions related to the original query, each revealing a brief answer and source link. |
| Shopping business clicked | A specific instance where the user clicked on a business link within the Google Shopping results. |
| Local Packs – Map business | A section showing local businesses on a map with contact info, ratings, and direct links, triggered by location-specific queries. |
| Featured Snippets | Highlighted answers extracted from third-party websites, shown in a box above organic results to directly answer a query. |
| Video Results | A row of video thumbnails with links to video content (YouTube or other platforms) relevant to the query. |
| Local Packs – Maps | Map-based search result block showing several local businesses related to the query, including address, phone, and ratings. |
| Discussion Forums | SERP feature that surfaces links to community discussions or forums (e.g., Reddit, Quora) relevant to the search query. |
| Paid results, Google Ads, Sponsored ads | Paid advertisement appearing in search results, generally identical in function to “Sponsored,” labeled clearly as an ad. |
| In-store availability | SERP information showing whether a product is available for purchase at local physical stores, often with stock status indicators. |
| From sources around the web | Aggregated snippets showing content from multiple sources, typically highlighting mentions of the search topic across various sites. |

For AIO, annotators recorded shorter dwell times (as little as three seconds) if the participant interacted meaningfully with an AIO block (i.e., clicked a link) or if the SERP feature was where they found the answer to their task.
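The recording rules can be expressed as a small filter. This is only a sketch of the rules as described in this post, not the tooling our annotators used (they coded sessions by hand). The `Dwell` class, the `meaningful` flag, and the function name are hypothetical, and the sketch assumes that short AIO dwells are kept in addition to the two longest dwells, which the rules above do not spell out.

```python
from dataclasses import dataclass

DWELL_THRESHOLD_S = 5.0  # from the text: five seconds or more
AIO_THRESHOLD_S = 3.0    # from the text: as little as three seconds for AIO
MAX_FEATURES = 2         # from the text: only the two longest dwells


@dataclass
class Dwell:
    feature: str              # e.g. "AIO", "Organic Result", "PAA"
    seconds: float            # how long the cursor or gaze lingered
    meaningful: bool = False  # hypothetical flag: clicked a link or found the answer here


def features_to_record(dwells):
    """Return the dwells an annotator would record under the rules above."""
    # Keep at most the two longest dwells that meet the five-second threshold.
    long_dwells = sorted(
        (d for d in dwells if d.seconds >= DWELL_THRESHOLD_S),
        key=lambda d: d.seconds,
        reverse=True,
    )[:MAX_FEATURES]
    # Assumption: short AIO dwells with a meaningful interaction are kept
    # on top of the two-dwell cap.
    short_aio = [
        d for d in dwells
        if d.feature == "AIO"
        and d.meaningful
        and AIO_THRESHOLD_S <= d.seconds < DWELL_THRESHOLD_S
    ]
    return long_dwells + short_aio


session = [
    Dwell("Organic Result", 12.0),
    Dwell("People Also Ask (PAA)", 6.0),
    Dwell("Video Results", 8.0),
    Dwell("AIO", 3.5, meaningful=True),
    Dwell("Sponsored", 2.0),
]
print([d.feature for d in features_to_record(session)])
# ['Organic Result', 'Video Results', 'AIO']
```

In this example the PAA dwell clears five seconds but is dropped because two longer dwells exist, while the 3.5-second AIO dwell is kept because the participant clicked a link in it.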

Annotators also documented additional participant interactions outside the primary interest feature, including clicks or expansions on the SERP (e.g., opening PAA sections or using filtering options). Each click that kept the participant within Google.com was individually tallied.

Annotators noted each participant’s final answer and the rank (i.e., SERP position) of the chosen final result. We did not track horizontal scroll positions for final answers (there were fewer than four of these in total). Positions were not assigned to AI Overviews.

For non-AIO interactions, annotators measured scroll depth as a percentage of the entire results page reached while the participant reviewed that feature (not just the feature itself), dwell time on specific SERP elements, query refinements, and the number of clicks that left Google.com for external websites.

This comprehensive coding approach allowed us to analyze user interactions systematically.

Measuring Dwell Time

Dwell time is the total time a user spends within the AIO block or SERP feature they are reviewing, regardless of interaction.

- Start Timer: when the user enters the AIO block (e.g., mouseover, viewport entry, focus event).

- Stop Timer: when the user leaves (e.g., scrolls away, blurs, or clicks outside).
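The start/stop definition above can be sketched as a small function over timestamped events. This is an illustration of the definition only; in the study, annotators timed dwells by hand from the recordings. The event format (`"enter"` / `"leave"` pairs with timestamps in seconds) and the function name are assumptions, and the sketch assumes that repeat visits to the same block are summed into one total.

```python
def total_dwell_seconds(events):
    """Sum the time spent inside a block.

    `events` is a chronological list of (timestamp_seconds, kind) tuples,
    where kind is "enter" (start timer) or "leave" (stop timer).
    """
    total = 0.0
    entered_at = None  # timestamp of the currently open visit, if any
    for timestamp, kind in events:
        if kind == "enter" and entered_at is None:
            entered_at = timestamp           # start timer
        elif kind == "leave" and entered_at is not None:
            total += timestamp - entered_at  # stop timer and add the visit
            entered_at = None
    # A visit with no matching "leave" is ignored rather than guessed at.
    return total


events = [
    (10.0, "enter"),  # user scrolls the AIO block into view
    (14.5, "leave"),  # user scrolls away
    (20.0, "enter"),  # user comes back
    (23.0, "leave"),  # user clicks outside
]
print(total_dwell_seconds(events))  # 7.5
```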

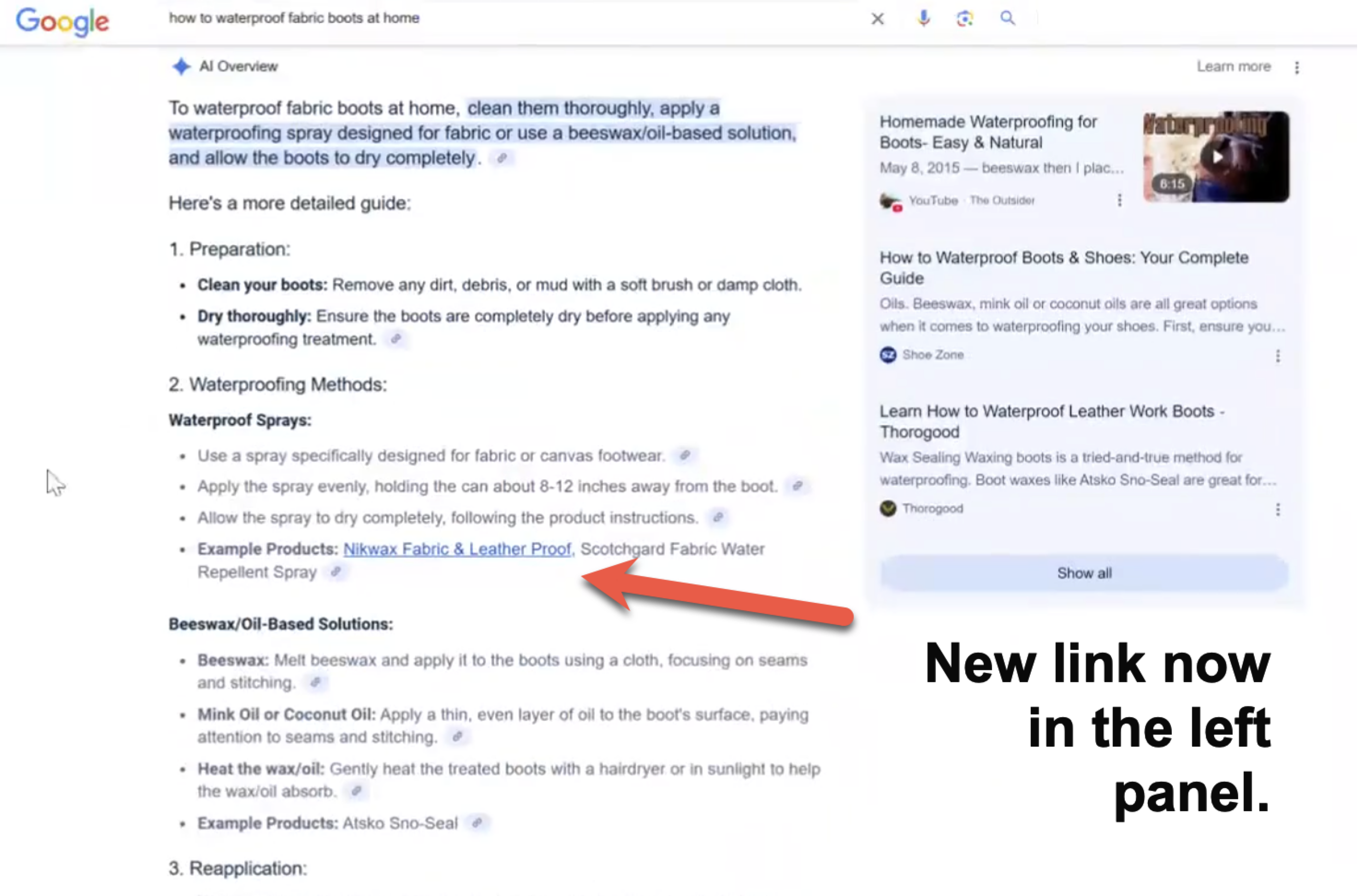

SERP Changes During Study

Between March and early April, Google added live outbound links to the left rail of AIO. For example, PayPal’s help article was added beside the “transfer PayPal money” query, Groupon/Budget/Avis links beside the “car‑rental promo codes” query, Mayo Clinic + Harvard Health on the artificial‑sweetener query, GC Buying/CardCash/Prepaid2Cash on the gift‑card query, and Travel & Leisure or Vessi on the waterproof‑boots query.

These site links did not appear at all in the March captures. We found no clicks on these new AIO links among participants who, in the March version, would have seen only link icons pointing to clickable external links on the right rail. The small number of changes did not impact the relevance of our results.

The classic organic stack also shifted: new first‑page winners include Mission Health and NIH (sweeteners), Travel & Leisure (waterproofing), GC Buying and KadePay (gift cards), plus Shoe Zone and Vessi (boots), displacing earlier leaders such as Shoe Zone/Thorogood or CardCash‑only lists.

Other studies and research

Backlinko’s 2020 “How People Use Google Search” study recruited participants via Mechanical Turk to complete seven real-world search tasks spanning commercial, local, informational, and transactional intents. Researchers screen-recorded each session and hand-annotated the recordings for actions such as clicks, scrolling, query rewrites, and dwell time before aggregating the data for statistical patterns. They did not use a speak-out-loud protocol (the tech for that has improved even in the last six years).

It was ground-breaking for its time. Since then, clickstream metrics aggregated by companies like Ahrefs and SimilarWeb can tell us much of what that study measured. That’s one of the main reasons we wanted to do a speak-out-loud protocol. Our main reason, of course, was to see what this wild animal, AIO, really looks like in the wild.

The emerging behavioral snapshot from Backlinko showed how dominant the first page, and especially content above the fold, had become. Only 9% of users ever reached the bottom of page one, and a mere 0.44% ventured to page two; most searches wrapped up in about 76 seconds without changing the original query and with very little pogo-sticking.

Findings from NN/g’s research (2019) illuminated how modern SERPs have become more complex and how this affects user behavior. They identified the “Pinball Pattern” of gaze, where instead of reading results top-down in order, users’ eyes bounce around the page non-linearly.

This was attributed to the rich features Google began presenting (images, knowledge panels, maps, ads, etc., not just text links). In fact, NN/g noted that the classic linear scan of the top few results was the exception rather than the norm. Users might look at a featured snippet, then flick their attention to a map on the right, then back to a lower organic result – much like a pinball bouncing between “attractors” on the page.

This insight is relevant to an AI-driven results page: an AI Overview box is another strong visual element that can pull user attention away from the traditional list of links. NN/g’s studies also measured how far down the SERP users tended to look and how often they reformulated queries.

NN/g’s participants were involved in moderated usability tests (in some cases, conducted in labs), which may differ from purely unmoderated natural behavior.

About Unmoderated Usability Studies

Unmoderated studies are a class of user research methods where participants complete a set of tasks or interactions with a digital product or platform on their own, without the direct guidance or observation of a researcher.

This approach contrasts with moderated studies, where a researcher actively facilitates the testing session, often asking questions and guiding the participant through specific scenarios. For marketers seeking to understand user behavior on expansive platforms, unmoderated testing presents several distinct advantages.

One of the primary benefits of unmoderated studies is their ability to scale and reach a broad audience. These tests can be deployed to a large and diverse group of participants across geographical locations, allowing for the collection of feedback from a wide range of users. This scalability is often coupled with cost and time efficiency.

Unmoderated tests generally require less financial investment and can be conducted more rapidly compared to moderated studies, as they eliminate the need for extensive scheduling and direct researcher involvement during testing sessions.

Furthermore, the absence of a moderator can reduce observer bias. Participants may exhibit more natural behaviors and provide more genuine feedback when they are in their own environment, rather than under the direct scrutiny of a researcher. Many unmoderated testing platforms also facilitate the collection of quantitative data, such as task completion rates, time on task, and navigation paths, providing measurable insights into user performance.